The three features in STRAT7’s segment chatbot that eliminate key problems in the use of GenAI in market research.

Welcome to our second blog on STRAT7’s new AI product – our segment chatbot.

To coin a phrase, many AI applications today have a ‘tourist’ problem.

They look good in demonstrations and use the usual buzzwords. But they often don’t solve real user problems and fail to gain traction.

While building our new product, we’ve faced similar perceptions and challenges. Here we explain what we’ve incorporated into our segment chatbot so that it reduces the risk of stereotyping and unintended bias, is clear about its sources and keeps data secure.

We believe this makes STRAT7’s segment chatbot a game-changing asset to your research process.

By working closely with our clients and STRAT7 colleagues to get feedback on various prototypes, we’ve embedded three design features to create a product of genuine value to insight and research teams.

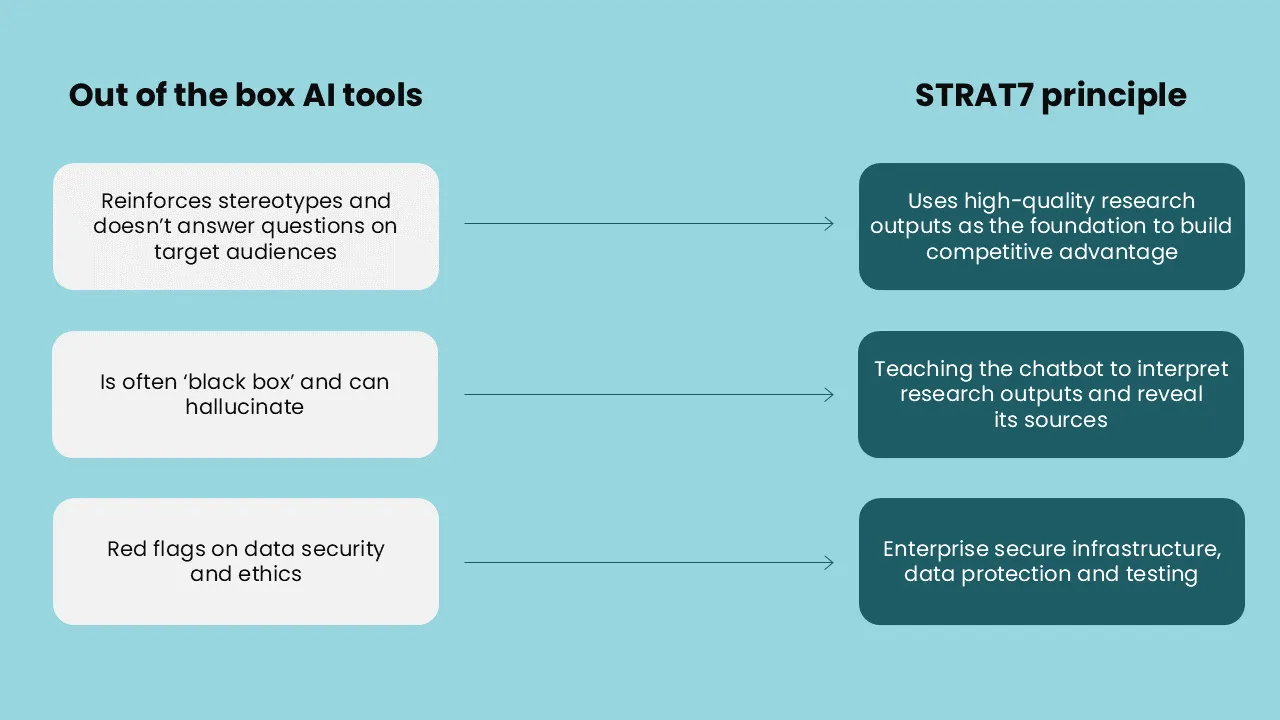

Solution #1: Stereotypes

As foundational Large Language Models (LLMs) are trained on ‘imbalanced’ datasets from the public internet, academic research has shown they can perpetuate bias and stereotypes.

While this is not a problem for table-stakes activities like summarising reports and helping with survey design, it can be a real issue when trying to use LLMs for more strategic tasks, like predicting which concepts will appeal to which customers, or tailoring a marketing CRM email to appeal to a certain group. Tools based on foundational LLMs alone will take what you give them and push it towards what is stereotypical, rather than basing it on the rich market research evidence that you hold.

The STRAT7 segment chatbot relies on your high-quality research data as the foundation to inform its responses. While this does not eliminate all types of bias, it can certainly help to reduce them.

Solution #2: Black box and hallucinations

In user testing sessions, the two most common questions were:

‘How do I know what the chatbot is using to inform its response?’

‘How can I check if the chatbot is hallucinating or making things up?’

To address this, we built a feature that allows people to see exactly which sources the chatbot is using when retrieving its answer, turning a black box tool into a glass box tool.

We also spent considerable time getting the chatbot to ‘understand’ market research deliverables – i.e. complex PPTs, PDFs and numeric data tables – so that it parses the information correctly. We have also developed a ‘system prompt’ behind the scenes that tells the tool to say it’s not sure of the answer unless it is 100% sure of the accuracy (especially important for factual numerical questions).

This all helps reduce the likelihood of hallucinations and build trust in the tool.
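To make the ‘glass box’ idea concrete, here is a minimal Python sketch of the general pattern: retrieve excerpts from your own research files, keep track of where each one came from, and pair them with a system prompt that tells the model to admit uncertainty. It is an illustration under our own assumptions rather than the chatbot’s actual code: the `Chunk` class, the `retrieve` and `build_prompt` functions, the naive word-overlap ranking and the wording of `SYSTEM_PROMPT` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical wording; the real system prompt is not published.
SYSTEM_PROMPT = (
    "Answer only from the research excerpts provided. "
    "If the excerpts do not contain the answer, say you are not sure. "
    "Never estimate or invent numbers."
)


@dataclass
class Chunk:
    source: str  # where the excerpt came from, e.g. file name and slide
    text: str    # the excerpt itself


def retrieve(question: str, chunks: list[Chunk], top_k: int = 2) -> list[Chunk]:
    """Rank excerpts by naive word overlap with the question.

    A stand-in for a real retriever; excerpts with no overlap are dropped.
    """
    q_words = set(question.lower().split())
    scored = [(len(q_words & set(c.text.lower().split())), c) for c in chunks]
    scored = [(score, c) for score, c in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:top_k]]


def build_prompt(question: str, chunks: list[Chunk]) -> dict:
    """Build the model input and the list of sources to show the user."""
    hits = retrieve(question, chunks)
    context = "\n".join(
        f"[{i + 1}] ({c.source}) {c.text}" for i, c in enumerate(hits)
    )
    return {
        "system": SYSTEM_PROMPT,
        "user": f"Excerpts:\n{context}\n\nQuestion: {question}",
        "sources": [c.source for c in hits],  # displayed next to the answer
    }
```

Because the `sources` list travels with every prompt, the interface can show the user which files and slides informed the answer. If it comes back empty, that is a signal the model has nothing in your research to draw on.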

Solution #3: Data security

By working closely with our partners at Amazon Web Services and our internal Information Security Team, we use a suite of models that are ‘enterprise secure’ in AWS Bedrock.

This means the prompts and data sent to the model are not used for model re-training and can be kept in a particular geographic zone (e.g. processed only in data centres within the EEA/UK). This re-uses the same principles and learnings from our first AI product – strat7GPT.
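As a rough sketch of what keeping data in a geographic zone can look like in code, the Python below refuses to send a request unless the chosen AWS region is on an allow-list, then calls a model through the Bedrock runtime client in that region. This is our own illustration, not STRAT7’s production setup: the `ALLOWED_REGIONS` list, the function names and the model ID you pass in are assumptions, and the `boto3` package plus valid AWS credentials are needed for the call itself.

```python
# Assumed allow-list of UK/EU regions; adjust to your own data policy.
ALLOWED_REGIONS = {"eu-west-1", "eu-west-2", "eu-central-1"}


def build_converse_request(model_id: str, system_prompt: str, user_text: str) -> dict:
    """Build keyword arguments for the Bedrock Converse API."""
    return {
        "modelId": model_id,
        "system": [{"text": system_prompt}],
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
    }


def ask(region: str, request: dict) -> str:
    """Send the request only if the region is on the allow-list."""
    if region not in ALLOWED_REGIONS:
        raise ValueError(f"Region {region!r} is outside the allowed zone")
    import boto3  # imported here so the region check works without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**request)
    return response["output"]["message"]["content"][0]["text"]
```

Putting the region check in front of the client means a misconfigured environment fails loudly instead of quietly sending research data somewhere it should not go.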

Find out more

We believe these three solutions are the most important in setting our tool apart from ‘out of the box’ AI chatbot tools. They come down to how STRAT7’s segment chatbot carefully processes and reveals its underlying sources, and how we ‘prime’ an LLM behind the scenes.

Feel free to contact us for a demonstration of how STRAT7’s segment chatbot can answer your research needs, or contact me directly:

Hasdeep Sethi

Data Science Director & STRAT7 AI Lead

STRAT7 Bonamy Finch

hasdeep.sethi@bonamyfinch.com
